The Musings and Findings of Software Consultant David Klein (Sydney, Australia)

Wednesday, 28 July 2010

Using SharePoint 2007 and SharePoint 2010 with Encrypted Databases

Nothing!

As the name of the feature (Transparent Data Encryption) suggests, you simply have to set it up on the SQL Server side (as per http://technet.microsoft.com/en-us/library/bb934049(SQL.100).aspx). Your underlying database files (and SharePoint content) and any backups thereof will then be encrypted without any extra effort on your part.

DDK

Monday, 10 August 2009

SQL Server Integration Services - How to check for the existence of an Excel Worksheet in SSIS

using System.Data;
using System.Data.OleDb;
using System.IO;

public class ScriptMain
{
    public void Main()
    {
        string fileToTest = Dts.Variables["ExcelFile"].Value.ToString();
        string tableToTest = Dts.Variables["ExcelTable"].Value.ToString();

        // Assume the worksheet is missing until it is found.
        Dts.Variables["ExcelTableExists"].Value = false;

        if (File.Exists(fileToTest))
        {
            string connectionString = "Provider=Microsoft.Jet.OLEDB.4.0;" +
                "Data Source=" + fileToTest + ";Extended Properties=Excel 8.0";

            // The using block closes the connection even if GetSchema throws.
            using (OleDbConnection excelConnection = new OleDbConnection(connectionString))
            {
                excelConnection.Open();
                DataTable excelTables = excelConnection.GetSchema("Tables");

                // Each row describes one worksheet or named range in the workbook.
                foreach (DataRow excelTable in excelTables.Rows)
                {
                    string currentTable = excelTable["TABLE_NAME"].ToString();
                    if (currentTable == tableToTest)
                    {
                        Dts.Variables["ExcelTableExists"].Value = true;
                    }
                }
            }
        }

        Dts.TaskResult = (int)ScriptResults.Success;
    }
}

For more information, see http://technet.microsoft.com/en-us/library/ms403358.aspx#example1

Tuesday, 21 July 2009

Fix SSIS 2008 Error - The conversion returned status value 2 and status text "The value could not be converted because of a potential loss of data."

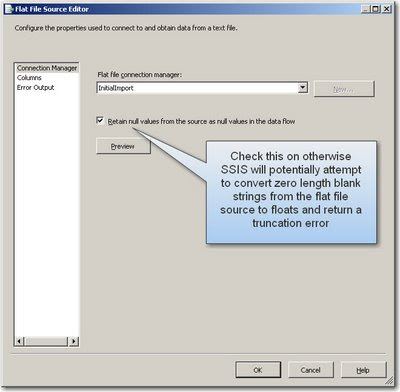

Today I imported a flat file (a csv) that ran through a Derived Column Transformation and then a Data Conversion Transformation before hitting SQL, and I hit the following error in SQL Server Integration Services 2008 (SSIS 2008):

"Data conversion failed while converting column ..... The conversion returned status value 2 and status text "The value could not be converted because of a potential loss of data.""

The data viewers and error outputs (the usual SSIS debugging techniques) didn't show anything unusual in the data as it was transformed. In addition, this problem only occurred on my float columns, and only when the data in the columns was blank. I did some quick checks to see if there were a few extra spaces in some of the fields and did a TRIM() for the derived column - but the same error kept coming up. I even enabled "Ignore truncation" and it still didn't work.

Information: 0x402090DE at ImportTemplateData, Flat File Source [2917]: The total number of data rows processed for file "C:\WorkforceProfileInitialImport\Q2_2008_162_Data.csv" is 1050.

Error: 0xC02020C5 at ImportTemplateData, Data Conversion [4034]: Data conversion failed while converting column "[8c Override Census Period FTE]" (2050) to column "Copy of [8c Override Census Period FTE]" (5338). The conversion returned status value 2 and status text "The value could not be converted because of a potential loss of data.".

Error: 0xC0209029 at ImportTemplateData, Data Conversion [4034]: SSIS Error Code DTS_E_INDUCEDTRANSFORMFAILUREONERROR. The "output column "Copy of [8c Override Census Period FTE]" (5338)" failed because error code 0xC020907F occurred, and the error row disposition on "output column "Copy of [8c Override Census Period FTE]" (5338)" specifies failure on error. An error occurred on the specified object of the specified component. There may be error messages posted before this with more information about the failure.

Error: 0xC0047022 at ImportTemplateData, SSIS.Pipeline: SSIS Error Code DTS_E_PROCESSINPUTFAILED. The ProcessInput method on component "Data Conversion" (4034) failed with error code 0xC0209029 while processing input "Data Conversion Input" (4035). The identified component returned an error from the ProcessInput method. The error is specific to the component, but the error is fatal and will cause the Data Flow task to stop running. There may be error messages posted before this with more information about the failure.

Information: 0x40043008 at ImportTemplateData, SSIS.Pipeline: Post Execute phase is beginning.

The underlying issue was that SSIS was trying to convert these blank strings (from the flat file source datatype) into the float datatype and was therefore constantly failing. The fix is to tick the "Retain null values from the source as null values in the data flow" option on the Flat File Source. The package then ran successfully for those columns which had just blank values.
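The failure mode can be reproduced outside SSIS. The following is only a Python sketch of the idea, not of anything SSIS does internally: a blank string has no numeric value, so it has to be mapped to null before conversion. The helper name `to_float_or_none` and the sample row are made up for illustration.

```python
from typing import Optional


def to_float_or_none(raw: str) -> Optional[float]:
    """Convert a flat-file field to float, treating a blank field as null."""
    text = raw.strip()
    if text == "":
        # Same effect as retaining nulls from the source: a blank becomes
        # None instead of being pushed through a numeric conversion.
        return None
    return float(text)


# Hypothetical row with two blank float fields.
row = ["12.5", "", "   ", "0.75"]
converted = [to_float_or_none(field) for field in row]
print(converted)
```

Calling `float("")` directly raises a `ValueError`, which is the Python counterpart of the conversion error above.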

Wednesday, 20 August 2008

Violation of PRIMARY KEY constraint 'PK_PrimaryKeyName'. Cannot insert duplicate key in object 'dbo.TableName'

System.Data.SqlClient.SqlException. ...

Violation of PRIMARY KEY constraint 'PK_PrimaryKeyName'. Cannot insert duplicate key in object 'dbo.TableName'.

Even when attempting to insert data directly into the table via SQL Server Management Studio, the same error would occur. The source of the issue was that the identity seed values were out of sync with the actual values in the table (a result of doing inserts with IDENTITY_INSERT set to ON). The simple fix was to change to "Results to Text" output mode in SQL Server Management Studio and run the T-SQL query:

SELECT 'DBCC CHECKIDENT (' + Table_Name + ')' FROM information_schema.tables WHERE TABLE_TYPE = 'BASE TABLE'

Run the output of this query - this corrected all the 'duplicate key' issues I was having after the deploy of the database scripts.

Tuesday, 12 August 2008

SQL Reporting Services Error: Logon failed. (rsLogonFailed) Logon failure: unknown user name or bad password. (Exception from HRESULT: 0x8007052E)

I've run into this SQL Reporting Services 2005 exception a couple of times now - typically when my Reporting Services execution accounts run as domain users whose passwords expire. You will get this error if the credentials supplied for the report (or, if none are supplied, the current SQL Reporting Services execution account) are incorrect. This happened on my local machine and the simple fix was to update the password on my execution account.

Corrected the account username/pass... fixed!

Monday, 19 May 2008

Simple Auditing with LINQ to SQL - Date, Time and User Stamps

There are many ways you can populate application audit tables or audit fields. These include:

- With triggers (but this relies on the SQL authentication mechanism for accurate user data);

- With stored procedures (this also relies on the accuracy of SQL authentication information, or you need to append the user name to the parameters of the procedure);

- On the Application Side - Manually coding all service/database calls to append this information.

- Use a SQL Server log auditing tool like LogExplorer that tracks the SQL Server transaction log.

- Leveraging your data access layer's update point to append this information before the update takes place.

Below is my code, which takes the last approach: it uses reflection to stamp each record with when it was created or updated and who did the insert or update. It is a simplified alternative to a solution with a full audit table (such as http://blog.matthidinger.com/2008/05/09/LINQToSQLAuditTrail.aspx), suited to cases where the requirement is just to display who last modified or created a particular record in your application:

/// <summary>
/// Basic Audit User and Date Stamp Functionality
/// </summary>
/// <param name="failureMode"></param>
public override void SubmitChanges(ConflictMode failureMode)
{
    // Compute the change set once rather than on every loop iteration.
    ChangeSet changes = this.GetChangeSet();

    //Updates
    foreach (object modifiedEntity in changes.Updates)
    {
        SetAuditStamp(this, modifiedEntity, ChangeType.Update);
    }

    //Inserts
    foreach (object modifiedEntity in changes.Inserts)
    {
        SetAuditStamp(this, modifiedEntity, ChangeType.Insert);
    }

    base.SubmitChanges(failureMode);
}

/// <summary>
/// For Inserts or Updates - set the user and date stamps
/// </summary>
/// <param name="context"></param>
/// <param name="modifiedEntity"></param>
/// <param name="changeType"></param>
private void SetAuditStamp(DataContext context, object modifiedEntity, ChangeType changeType)
{
    string userName = System.Threading.Thread.CurrentPrincipal.Identity.Name;
    const string Created = "Created", CreatedBy = "CreatedBy",
                 Modified = "Modified", ModifiedBy = "ModifiedBy";

    if (changeType == ChangeType.Insert)
    {
        SetAuditValue(modifiedEntity, Created, System.DateTime.Now);
        SetAuditValue(modifiedEntity, CreatedBy, userName);
    }
    else if (changeType == ChangeType.Update)
    {
        SetAuditValue(modifiedEntity, Modified, System.DateTime.Now);
        SetAuditValue(modifiedEntity, ModifiedBy, userName);
    }
}

/// <summary>
/// The type of modifications
/// </summary>
private enum ChangeType
{
    Update,
    Insert
}

/// <summary>
/// Set target value if it exists on the object
/// </summary>
/// <param name="modifiedEntity"></param>
/// <param name="fieldName"></param>
/// <param name="propertyValue"></param>
private void SetAuditValue(object modifiedEntity, string fieldName, object propertyValue)
{
    // Look the property up once; entities without the audit column are skipped.
    System.Reflection.PropertyInfo property = modifiedEntity.GetType().GetProperty(fieldName);
    if (property != null) //Set current user and time stamp
    {
        property.SetValue(modifiedEntity, propertyValue, null);
    }
}

Friday, 16 May 2008

Microsoft SQL Server 2005 Express Edition with Advanced Services (aka with Reports) - Now Available

The spiel for this new download is:

"Free to download, free to deploy, and free to redistribute as an embedded part of an application, SQL Server Express with Advanced Services is the fast and easy way to develop and manage data-driven applications with powerful built-in reporting and full-text search functionality."

You can now find the answer to your redistributable reporting and full-text indexing issues right here: http://msdn.microsoft.com/en-us/express/aa718379.aspx

Thursday, 15 May 2008

Automatically Generating Audit Columns for your Database - no monkey work!

Open a query on your database in SQL 2005 Management Studio and paste in the script below. Change your output mode to "text", and then run it by pressing F5. The output window will contain a script that adds the audit columns to every table in your database - table auditing heaven!

SELECT 'ALTER TABLE ' + TABLE_NAME + ' ADD Modified datetime NOT NULL DEFAULT GETDATE(),

ModifiedBy nvarchar(255) NOT NULL DEFAULT USER_NAME(),

Created datetime NOT NULL DEFAULT GETDATE(),

CreatedBy nvarchar(255) NOT NULL DEFAULT USER_NAME() ' FROM INFORMATION_SCHEMA.TABLES

WHERE TABLE_TYPE = 'BASE TABLE'

AND TABLE_NAME <> 'sysdiagrams'

Friday, 9 May 2008

Resetting an Identity Field to 0 when testing a data migration process

Never fear - It IS possible to reset the identity seed value by using the "DBCC CHECKIDENT" command. For example, to reset a table so that the next identity value is 1, you can run the following as part of your data migration script:

DBCC CHECKIDENT('TableName', RESEED, 0)

This will force the next value generated to be 1. Also note that the DBCC CHECKIDENT command even works with fully qualified names for the tablename parameter. For example:

ALTER PROCEDURE [dbo].[proc_InitializePrimaryTables]

AS

BEGIN

-- SET NOCOUNT ON added to prevent extra result sets from

-- interfering with SELECT statements.

SET NOCOUNT ON;

-- Insert statements for procedure here

TRUNCATE TABLE InvestmentManagementDataMigration..OpportunityStatusHistory

DBCC CHECKIDENT('InvestmentManagementDataMigration..OpportunityStatusHistory', RESEED, 0)

DELETE FROM InvestmentManagementDataMigration..OpportunityUserRole

DBCC CHECKIDENT('InvestmentManagementDataMigration..OpportunityUserRole', RESEED, 0)

DELETE FROM InvestmentManagementDataMigration..FundInterest

DBCC CHECKIDENT('InvestmentManagementDataMigration..FundInterest', RESEED, 0)

DELETE FROM InvestmentManagementDataMigration..OpportunitySourceContact

DBCC CHECKIDENT('InvestmentManagementDataMigration..OpportunitySourceContact', RESEED, 0)

DELETE FROM InvestmentManagementDataMigration..AssetOwnership

DBCC CHECKIDENT('InvestmentManagementDataMigration..AssetOwnership', RESEED, 0)

DELETE FROM InvestmentManagementDataMigration..AssetRelationship

DBCC CHECKIDENT('InvestmentManagementDataMigration..AssetRelationship', RESEED, 0)

DELETE FROM InvestmentManagementDataMigration..Opportunity

DBCC CHECKIDENT('InvestmentManagementDataMigration..Opportunity', RESEED, 0)

DELETE FROM InvestmentManagementDataMigration..Contact

DBCC CHECKIDENT('InvestmentManagementDataMigration..Contact', RESEED, 0)

DELETE FROM InvestmentManagementDataMigration..Company

DBCC CHECKIDENT('InvestmentManagementDataMigration..Company', RESEED, 0)

DELETE FROM InvestmentManagementDataMigration..Address

DBCC CHECKIDENT('InvestmentManagementDataMigration..Address', RESEED, 0)

END

Trivia:

Just to be tricky, I tried setting the seed to a negative value (-1) just to see what this would do...and the next value generated was 10. I don't know about you, but last time I checked, 10 does not follow -1!

Monday, 28 April 2008

LINQ Group By Syntax for Grouping on Multiple Columns (another peculiarity of syntax in LINQ)

It is an oversight by the folks at Microsoft that LINQ does not have a built-in group by operator that supports multiple group by columns. For example, I found it unusual that LINQ does not support multiple grouping statements like so:

from f in FundUserRoles

group f by f.RoleId, f.UserName into myGroup

where myGroup.Count() > 0

select new {myGroup.Key, ProductCount = myGroup.Count()}

If you try to run this query, you will get a syntax error on the second line. You cannot just add items to the group by clause like this. Instead, to get this group by working, you must use anonymous types and do the following:

from f in FundUserRoles

group f by new {f.RoleId, f.UserName}

into myGroup

where myGroup.Count() > 0

select new { myGroup.Key.RoleId, myGroup.Key.UserName, FundCount = myGroup.Count()}
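The underlying idea is that the group key is a single composite value built from several columns. As a rough analogue outside LINQ, here is a small Python sketch in which a tuple plays the role of the anonymous type; the sample rows and the `FundId` field are made up for illustration.

```python
from collections import Counter

# Hypothetical rows standing in for the FundUserRoles table.
fund_user_roles = [
    {"RoleId": 1, "UserName": "alice", "FundId": 10},
    {"RoleId": 1, "UserName": "alice", "FundId": 11},
    {"RoleId": 2, "UserName": "alice", "FundId": 10},
    {"RoleId": 2, "UserName": "bob", "FundId": 12},
]

# The tuple is one key made of two columns, like new {f.RoleId, f.UserName}.
fund_counts = Counter((row["RoleId"], row["UserName"]) for row in fund_user_roles)

for (role_id, user_name), fund_count in sorted(fund_counts.items()):
    print(role_id, user_name, fund_count)
```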

Using Regular Expressions for Simple Code Generation in UltraEdit

My task – I wanted to do a multiple column join to determine if any updates had been made to a table (compared to a table which contained the original Excel import). Instead of doing the hard work of going through all 70 columns and manually typing each comparison (e.g. Table1.ColumnName = Table2.ColumnName), I used the following shortcut:

- Drag the columns folder from the treeview to the working area in SQL 2005 WorkBench so you have a list of comma-separated columns (e.g. RecordNumber, Information, RecordType, DocAuthor)

- Copy this into the text editor, UltraEdit (make sure you have Advanced -> Configuration -> Search -> Regular Expression Engine set to “Unix Style” as used in .NET rather than “Ultra Edit Style”)

- Ctrl+R to open the replace dialog and put in “\W*(\w*),” to the Find box and “Table1.\1 = Table2.\1 AND\n” in the Replace Box.

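- Do the replace and you will now have a join expression for use in your queries. The last column in the list has no trailing comma, so the pattern does not match it and that line has to be finished by hand. i.e.

Table1.RecordNumber = Table2.RecordNumber AND

Table1.Information = Table2.Information AND

Table1.RecordType = Table2.RecordType AND

Table1.DocAuthor = Table2.DocAuthor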
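If you want to check the find/replace outside UltraEdit, the same substitution can be sketched in Python. This is an illustration only: it uses `\w+` rather than `\w*`, and appending a comma to the column list and trimming the final AND are my additions so that the last column is handled too.

```python
import re

# Comma-separated column list, as dragged out of the columns folder.
columns = "RecordNumber, Information, RecordType, DocAuthor"

# Same find/replace as the UltraEdit step. The appended comma lets the
# pattern also match the last column, which has no trailing comma.
joined = re.sub(r"\W*(\w+),", r"Table1.\1 = Table2.\1 AND\n", columns + ",")

# Remove the dangling AND left after the final column.
join_clause = re.sub(r"\s+AND\s*$", "", joined)

print(join_clause)
```

The result is one `Table1.X = Table2.X` comparison per column, joined with AND, ready to paste into an ON clause.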

You can now put the joins back into your query:

SELECT

*

FROM

dbo.OpportunityRawImport_Stage1Load as Stage1

INNER JOIN

dbo.OpportunityRawImport_Stage2Load as Stage2

ON

Stage1.RecordNumber = Stage2.RecordNumber AND

Stage1.Information = Stage2.Information AND

Stage1.RecordType = Stage2.RecordType

Handy Tools for Building Regular Expressions:

A free regular expression editor with English interpretations of your regular expressions (The Regular Expression Workbench) can be found at:

http://blogs.msdn.com/ericgu/archive/2003/07/07/52362.aspx. For example, a regular expression for Australian postcodes ("^[\d]{4,4}$") is interpreted as:

^ (anchor to start of string)

Any character in "\d"

At least 4, but not more than 4 times

$ (anchor to end of string)
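To see that interpretation in action, here is a short Python sketch that applies the same pattern to a few strings. The function name `is_postcode` and the sample inputs are mine; note that `{4,4}` means the same thing as `{4}`.

```python
import re

# The postcode pattern from the text: exactly four digits, anchored at both ends.
POSTCODE = re.compile(r"^[\d]{4,4}$")


def is_postcode(text: str) -> bool:
    """Return True when the whole string is four digits."""
    return POSTCODE.match(text) is not None


for candidate in ["2000", "200", "20000", "2O00"]:
    print(candidate, is_postcode(candidate))
```

Only "2000" matches: "200" is too short, "20000" is too long, and "2O00" contains a letter O.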

Friday, 16 November 2007

The old schema refresh problem with SQL Server 7.0 still exists in SQL 2005

if exists (select * from dbo.sysobjects where id = object_id(N'[dbo].[Client]') and OBJECTPROPERTY(id, N'IsUserTable') = 1)

drop table [dbo].[Client]

GO

CREATE TABLE [dbo].[Client] (

[ClientID] [int] IDENTITY (1, 1) NOT NULL ,

[Name] [varchar] (50) NULL ,

) ON [PRIMARY]

GO

if exists (select * from dbo.sysobjects where id = object_id(N'[dbo].[vwClient]') and OBJECTPROPERTY(id, N'IsView') = 1)

drop view [dbo].[vwClient]

GO

SET QUOTED_IDENTIFIER ON

GO

SET ANSI_NULLS ON

GO

CREATE VIEW dbo.vwClient

AS

SELECT dbo.Client.*

FROM dbo.Client

GO

SET QUOTED_IDENTIFIER OFF

GO

SET ANSI_NULLS ON

GO

BEGIN TRANSACTION

SET QUOTED_IDENTIFIER ON

SET TRANSACTION ISOLATION LEVEL SERIALIZABLE

SET ARITHABORT ON

SET NUMERIC_ROUNDABORT OFF

SET CONCAT_NULL_YIELDS_NULL ON

SET ANSI_NULLS ON

SET ANSI_PADDING ON

SET ANSI_WARNINGS ON

COMMIT

BEGIN TRANSACTION

ALTER TABLE dbo.Client ADD

DateUpdated datetime NULL

GO

COMMIT

SELECT * FROM vwClient

Even worse, if you remove a column, you are guaranteed runtime errors unless you recreate all dependent views. To fix this, run sp_refreshview 'viewname' on the views whose underlying tables have been updated - or you can manually trigger a refresh by going to design view of the view, adding a space to the query definition and clicking OK.

The simplest way to do this for all views is to use the following stored procedure, which refreshes each view in turn and so surfaces any problems:

if exists (select * from dbo.sysobjects where id = object_id(N'[dbo].[procRefreshAllViews]') and OBJECTPROPERTY(id, N'IsProcedure') = 1)

drop procedure [dbo].[procRefreshAllViews]

GO

SET QUOTED_IDENTIFIER ON

GO

SET ANSI_NULLS OFF

GO

CREATE PROC dbo.procRefreshAllViews

AS

SET quoted_identifier off

DECLARE @ObjectName varchar (255)

DECLARE @ObjectName_header varchar (255)

DECLARE tnames_cursor CURSOR FOR SELECT name FROM sysobjects

WHERE type = 'V' AND uid = 1 Order By Name

OPEN tnames_cursor

FETCH NEXT FROM tnames_cursor INTO @Objectname

WHILE (@@fetch_status <> -1)

BEGIN

IF (@@fetch_status <> -2)

BEGIN

SELECT @ObjectName_header = 'Refreshing ' + @ObjectName

PRINT @ObjectName_header

EXEC('sp_refreshview ' + @ObjectName)

END

FETCH NEXT FROM tnames_cursor INTO @ObjectName

END

PRINT ' '

SELECT @ObjectName_header = 'ALL VIEWS HAVE BEEN REFRESHED'

PRINT @ObjectName_header

CLOSE tnames_cursor

DEALLOCATE tnames_cursor

GO

SET QUOTED_IDENTIFIER OFF

GO

SET ANSI_NULLS ON

GO

This problem can be detected easily with SSW SQL Auditor which you can get from http://www.ssw.com.au/ssw/sqlauditor/

Wednesday, 7 November 2007

How to concatenate strings from multiple rows into one in SQL Server 2000 or 2005

CREATE TABLE Colors
(
    Color VARCHAR(32)
)
GO

SET NOCOUNT ON
INSERT Colors SELECT 'red'
INSERT Colors SELECT 'orange'
INSERT Colors SELECT 'blue'
INSERT Colors SELECT 'green'
GO

DECLARE @colors VARCHAR(1024)
SELECT
    @colors = COALESCE(@colors + ',', '') + Color
FROM
    Colors
SELECT Colors = @colors
GO

DROP TABLE Colors
GO

Thanks to http://databases.aspfaq.com/general/how-do-i-concatenate-strings-from-a-column-into-a-single-row.html

Wednesday, 5 September 2007

Dynamic SQL WHERE Clauses without sp_executesql

I was asked again today about doing dynamic WHERE clauses without using sp_executesql. See below for my previous answer (19/10/2005). The only improvement I would suggest now is to make the statements even more readable with the ISNULL() function:

e.g. AND UserName >= ISNULL(@FromUser, UserName). See below for more details.

---ORIGINAL RESPONSE---

I used this a few years ago at Kanes Hire, but I found it quite useful again at Multibase:

If you have a search screen, but want to show ALL records if a parameter is NULL, you can use the following (without IF…THEN conditional logic):

The trick is that the CASE expressions always resolve to true when the parameter is NULL, so the criterion is effectively ignored, just like an “ALL”. This seems to perform well on the small queries I used here.

SQL:

AND UserName >= CASE WHEN @FromUser IS NULL THEN UserName ELSE @FromUser END

CREATE PROCEDURE up_StampingReport

@FromDate datetime,

@ToDate datetime,

@FromUser varchar(255),

@ToUser varchar(255),

@IsOutsideSLA varchar(255),

@Rework_Count int,

@TransactionType_ID int,

@IsMatterWithClient char(1),

@IsMatterWithPerpetual char(1)

AS

SELECT *

FROM VW_STAMPREPORT

WHERE

(Expected_datetime >= @FromDate AND Expected_datetime <= @ToDate)
AND UserName >= CASE WHEN @FromUser IS NULL THEN UserName ELSE @FromUser END
AND UserName <= CASE WHEN @ToUser IS NULL THEN UserName ELSE @ToUser END
AND IsOutsideSLA = CASE WHEN @IsOutsideSLA IS NULL THEN IsOutsideSLA ELSE @IsOutsideSLA END
AND Rework_Count = CASE WHEN @Rework_Count IS NULL THEN Rework_Count ELSE @Rework_Count END
AND TransactionType_Id = CASE WHEN @TransactionType_ID IS NULL THEN TransactionType_Id ELSE @TransactionType_ID END
AND IsMatterWithClient = CASE WHEN @IsMatterWithClient IS NULL THEN IsMatterWithClient ELSE @IsMatterWithClient END
AND IsMatterWithPerpetual = CASE WHEN @IsMatterWithPerpetual IS NULL THEN IsMatterWithPerpetual ELSE @IsMatterWithPerpetual END

GO
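To try the pattern without a SQL Server instance, here is a minimal sketch using Python's built-in sqlite3 module. SQLite stands in for SQL Server purely as an assumption for illustration; the table `stamp_report`, its rows and the `search` helper are made up, and only two of the filters are shown.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE stamp_report (UserName TEXT, Rework_Count INTEGER)")
conn.executemany(
    "INSERT INTO stamp_report VALUES (?, ?)",
    [("alice", 0), ("bob", 2), ("carol", 2), (None, 1)],
)


def search(from_user=None, rework_count=None):
    """Return matching user names; a None parameter disables that filter."""
    sql = """
        SELECT UserName FROM stamp_report
        WHERE UserName >= CASE WHEN :from_user IS NULL THEN UserName ELSE :from_user END
          AND Rework_Count = CASE WHEN :rework IS NULL THEN Rework_Count ELSE :rework END
        ORDER BY UserName
    """
    params = {"from_user": from_user, "rework": rework_count}
    return [row[0] for row in conn.execute(sql, params)]


print(search())                 # no filters supplied
print(search(from_user="bob"))  # only the user filter
print(search(rework_count=2))   # only the rework filter
```

One thing the sketch makes visible: the row whose UserName is NULL is never returned, even when no filter is supplied, because a NULL compared with itself is not true. The same three-valued logic applies to column-to-column comparisons in SQL Server, so the technique suits columns that are not nullable.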

Sunday, 12 August 2007

The remote name could not be resolved (Microsoft.SqlServer.Management.UI.RSClient)

In order to resolve the error you need to ensure that the element http://localhost/reportserver in the rsreportserver.config is configured to the correct computer name.

By default the rsreportserver.config file is in the directory C:\Program Files\Microsoft SQL Server\MSSQL.3\Reporting Services\ReportServer\.

David Klein www.ssw.com.au